A Grain of Salt for FIRE Free Speech Rankings

Speech code is OP, survey measurements seem noisy, and "Warning Schools" lead the pack on viewpoint diversity

The Foundation for Individual Rights and Expression recently released their annual College Free Speech Rankings, drawing on a large survey of currently enrolled students at more than 200 colleges.

In doing this, their clearly stated goal is to move people away from the bad schools and toward the good ones.

This is all fine, so far as I’m concerned. Schools need to be monitored and held accountable for things to improve. But looking over FIRE’s rankings makes me wonder… is anyone holding the monitors to account?

Speech Code Measures

FIRE has a lot of leeway in determining how to measure free speech on campus. They decide which questions make it into the survey, how to word them, and which ones count for more. Even seemingly inconsequential decisions can substantially change the answers you collect.

As I mindlessly scrolled FIRE’s website while procrastinating (officially, starting my initial research), I discovered a feature of FIRE’s rankings that potentially allows even more scope to influence the results of their campus investigation. If you’re as bored as I was, you can find it too, at the very bottom of the survey’s home page.

It turns out that in addition to surveying students about how easy it is to discuss controversial topics like abortion or gun control,[1] FIRE incorporates its own evaluation of written speech codes, which works as follows:

Speech Code measures… determine whether college policies restrict student speech that is protected by the First Amendment. “Green” ratings indicate more open environments for free speech and give a college a higher score, but “Warning” and “Red” ratings show that colleges have policy environments that dampen free speech, and college scores are penalized.

Since FIRE has taken the trouble to collect so much data on the campus environment, we should be able to test just how important these written speech codes actually are. Do schools with “Green” speech codes consistently perform better on the other metrics they collect?

General Overview

A first look at the top of the rankings suggests that the answer is yes—20 of the top 25 schools earn a Green rating. Insofar as FIRE is drawing from a broad set of meaningful inputs to obtain their overall score measure, it’s reasonable to presume that the speech code rating goes hand in hand with other elements of a free campus environment.

If that were the case, we might expect nearly all Green schools to perform well on student reports. This is not what we observe.

A simple filter of the rankings shows that the bottom fifth of Green schools (6 out of 29 total) show less than stellar performance, scoring worse than 8 Red schools.
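For anyone who wants to repeat this kind of filter, here is a minimal sketch in Python. The rows below are made-up placeholders (school names, ratings, and scores are all hypothetical, not FIRE's actual numbers), and the function name and the `min_red` parameter are my own inventions for illustration. It simply flags Green schools whose overall score falls below at least a given number of Red schools.

```python
def green_schools_outscored_by_red(rows, min_red=1):
    """Return Green schools scoring below at least `min_red` Red schools."""
    red_scores = [r["score"] for r in rows if r["rating"] == "Red"]
    flagged = []
    for r in rows:
        if r["rating"] != "Green":
            continue
        # Count the Red schools that outscore this Green school.
        n_red_above = sum(1 for s in red_scores if s > r["score"])
        if n_red_above >= min_red:
            flagged.append(r["school"])
    return flagged


# Hypothetical placeholder rows, NOT real ranking data.
rows = [
    {"school": "Green 1", "rating": "Green", "score": 70.0},
    {"school": "Green 2", "rating": "Green", "score": 40.0},
    {"school": "Red 1", "rating": "Red", "score": 55.0},
    {"school": "Red 2", "rating": "Red", "score": 45.0},
    {"school": "Red 3", "rating": "Red", "score": 30.0},
]

flagged = green_schools_outscored_by_red(rows, min_red=2)
print(flagged)
```

With the real rankings loaded into the same row format, setting `min_red=8` would correspond to the comparison described above.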

Similarly, some Red schools manage to overcome their rating to place quite high overall.

While even the best general rules tend to have occasional exceptions, this seems a little fishy to me. To get a better sense for the connection between speech code and overall performance, let’s take a look at a specific example.

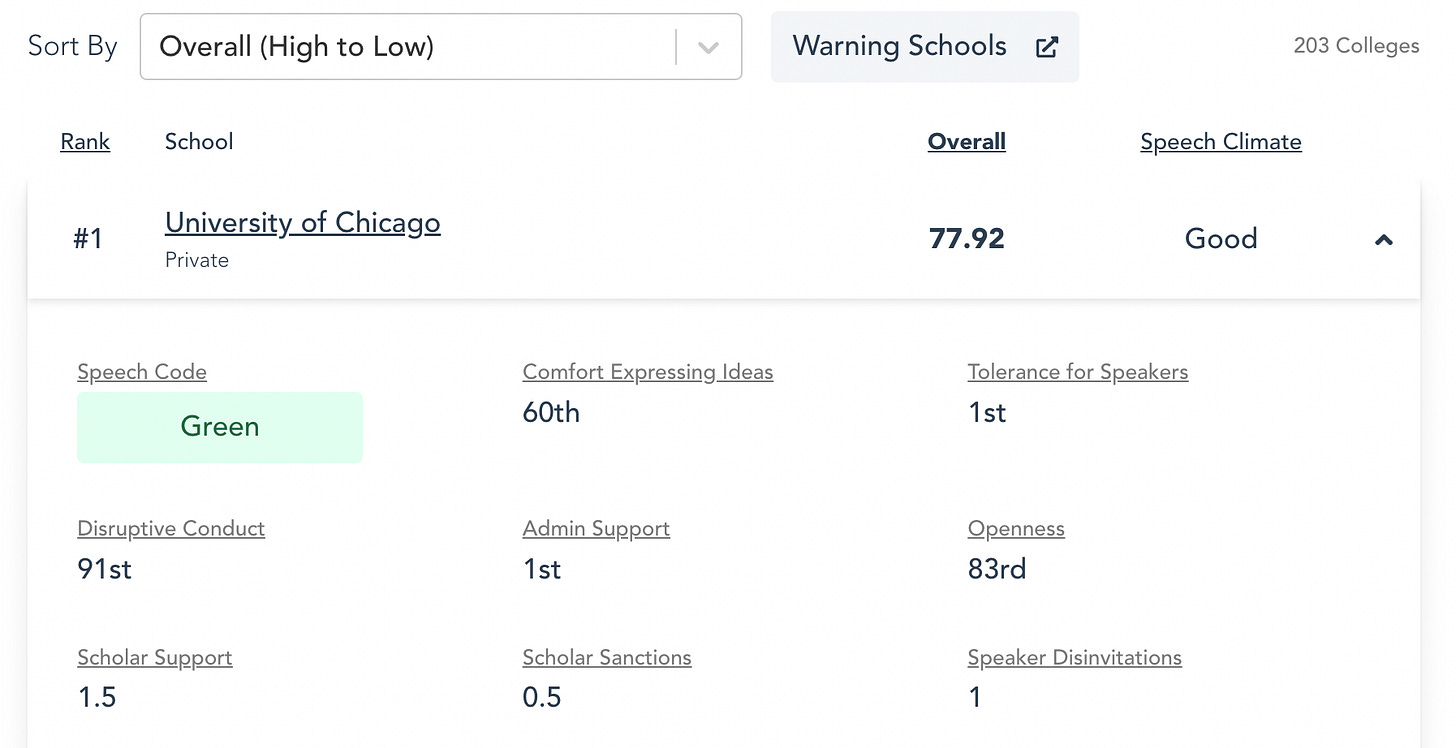

UChicago

No school better epitomizes FIRE’s vision of what a university should be than UChicago. Since 2015, much of their advocacy has centered around persuading less enlightened campuses to adopt the Chicago Free Speech Statement, which guarantees “all members of the University community the broadest possible latitude to speak, write, listen, challenge, and learn”. Unsurprisingly, Chicago earns a Green rating.

If you buy the “FIRE hypothesis”—my shorthand for the proposition that Green speech codes → all-around better campus environments—it should also be unsurprising that Chicago scores first overall. C’mon people, they’re the best.

But most of these metrics are far from impressive. Despite the anecdotal examples you hear of Chicago standing by controversial scholars, they show as many sanctions and disinvitations here as instances of support. Surveyed measures are similarly mixed, with rankings of disruptive conduct (e.g. is violence toward speakers acceptable?) and openness coming in not far from the median.

While seeing the school everyone already knows to be the model of free speech at the top inspires a lot of initial confidence for casual observers, it’s quite possible that points awarded by FIRE’s speech code assessment are driving the high ranking we see for Green schools instead of merely reflecting strong overall performance.

How is Overall Score Calculated?

At this point I’m wondering how much weight FIRE assigns to their speech code assessment relative to other measures. Fortunately, they spell this out transparently on their website.

Abstracting a bit from some of the details, here are the basic steps:

Step 1:

Combine the six surveyed components according to their relative weights. Comfort expressing ideas and speaker tolerance receive the most weight, while disruptive conduct and administrative support receive the least (roughly a third of the weight given to the largest categories).[2]

Step 2:

Apply rewards and penalties for administrative handling of speech controversies. Point increments here seem relatively marginal to me, so I’ll mostly skip over this.

Step 3:

Standardize school scores to obtain an average of 50 and a standard deviation of 10. Note that Warning schools are not included here and are instead standardized relative to their own group. More on this later.

Step 4:

Incorporate speech code awards/penalties:

+1 standard deviation for Green

-0.5 standard deviation for Yellow

-1 standard deviation for Red or Warning

In Sum:

$$\text{Overall Score} = \left(50 + 10 \cdot Z_{\text{Raw Overall Score}}\right) + \text{FIRE Rating}$$ [3]

After processing all of this, I am now much more worried about the speech code measure driving the overall results. If two schools score identically well on the basis of student surveys and concrete instances of support or censorship, they can still differ by as much as 20 points using this methodology.
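To make the 20-point claim concrete, here is a rough sketch of Steps 3 and 4 in Python. This is my own reading of the published steps and not FIRE's actual code. The school names and raw scores are hypothetical, the use of the population standard deviation is an assumption, and the sketch skips Steps 1 and 2 as well as the separate standardization of Warning schools.

```python
from statistics import mean, pstdev

# Speech code adjustments in points. After standardization 1 SD = 10 points,
# so +1 / -0.5 / -1 SD become +10 / -5 / -10 (Step 4).
SPEECH_CODE_POINTS = {"Green": 10.0, "Yellow": -5.0, "Red": -10.0, "Warning": -10.0}


def standardize(raw_scores):
    """Rescale raw scores to mean 50 and standard deviation 10 (Step 3).

    Assumption: population SD; the real method may use the sample SD.
    """
    values = list(raw_scores.values())
    mu = mean(values)
    sigma = pstdev(values)
    return {school: 50 + 10 * (raw - mu) / sigma for school, raw in raw_scores.items()}


def overall_scores(raw_scores, ratings):
    """Add the speech code reward or penalty to each standardized score (Step 4)."""
    standardized = standardize(raw_scores)
    return {s: standardized[s] + SPEECH_CODE_POINTS[ratings[s]] for s in standardized}


# Hypothetical raw scores: schools A and B are identical before Step 4.
raw = {"A": 60.0, "B": 60.0, "C": 45.0, "D": 55.0}
ratings = {"A": "Green", "B": "Red", "C": "Yellow", "D": "Yellow"}

scores = overall_scores(raw, ratings)
gap = scores["A"] - scores["B"]
print(f"Gap between A and B: {gap:.1f} points")  # prints "Gap between A and B: 20.0 points"
```

Whatever raw scores you plug in, two schools that tie before Step 4 end up 20 points apart if one is Green and the other is Red, because the adjustment is added after standardization.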

Is Speech Code Overpowered?

Given their marketing, I would never have guessed that so much of the ranking comes down to FIRE’s own subjective assessment of what constitutes a good speech code. A movement of 20 points closes 30% of the gap between top-ranked Chicago and bottom-ranked Columbia!

Still, we’ve only scratched the surface of what’s going on here. Let’s assess the extent of the issue by comparing similar schools that differ on the speech code dimension.

Mississippi State vs. Oklahoma State

Both of these schools land in the top five, with nearly identical scores. But only MSU earns the superior Green rating.

What does the Green rating buy you in FIRE’s rankings? Apparently a lot. MSU is substantially worse on every single survey metric!

Let’s look at a few more.

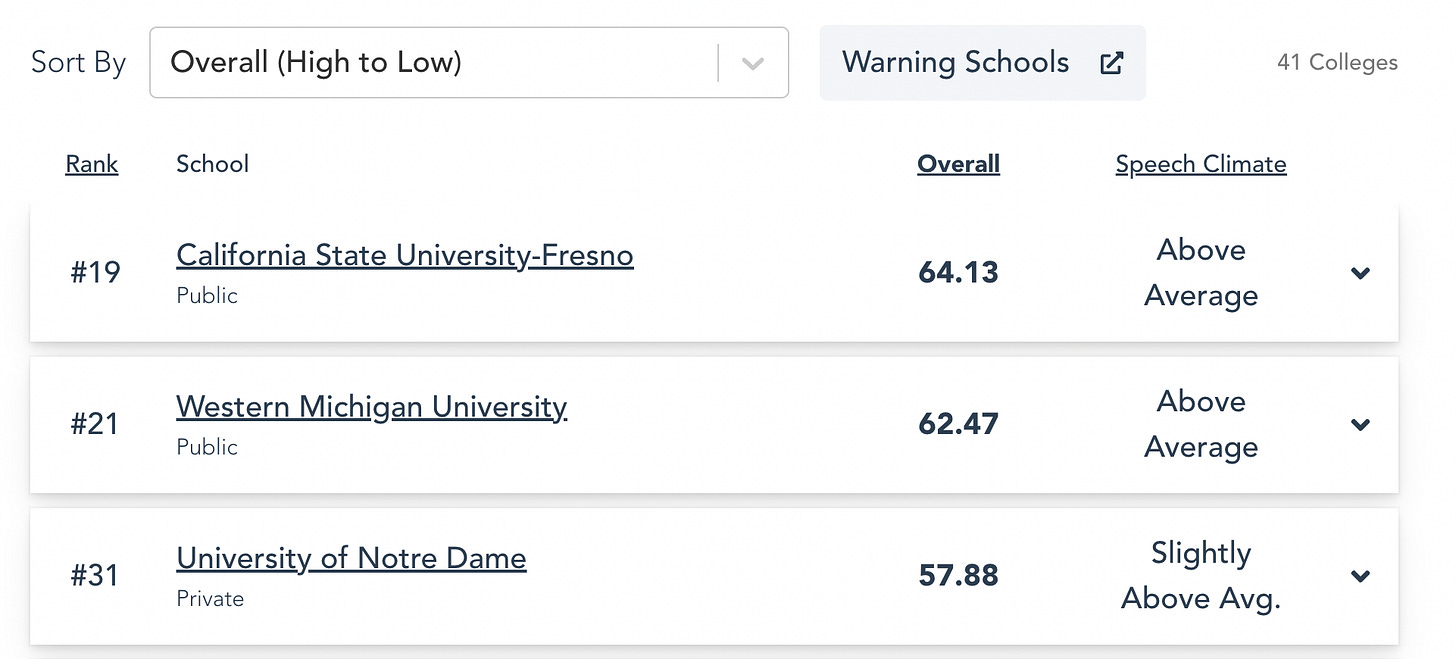

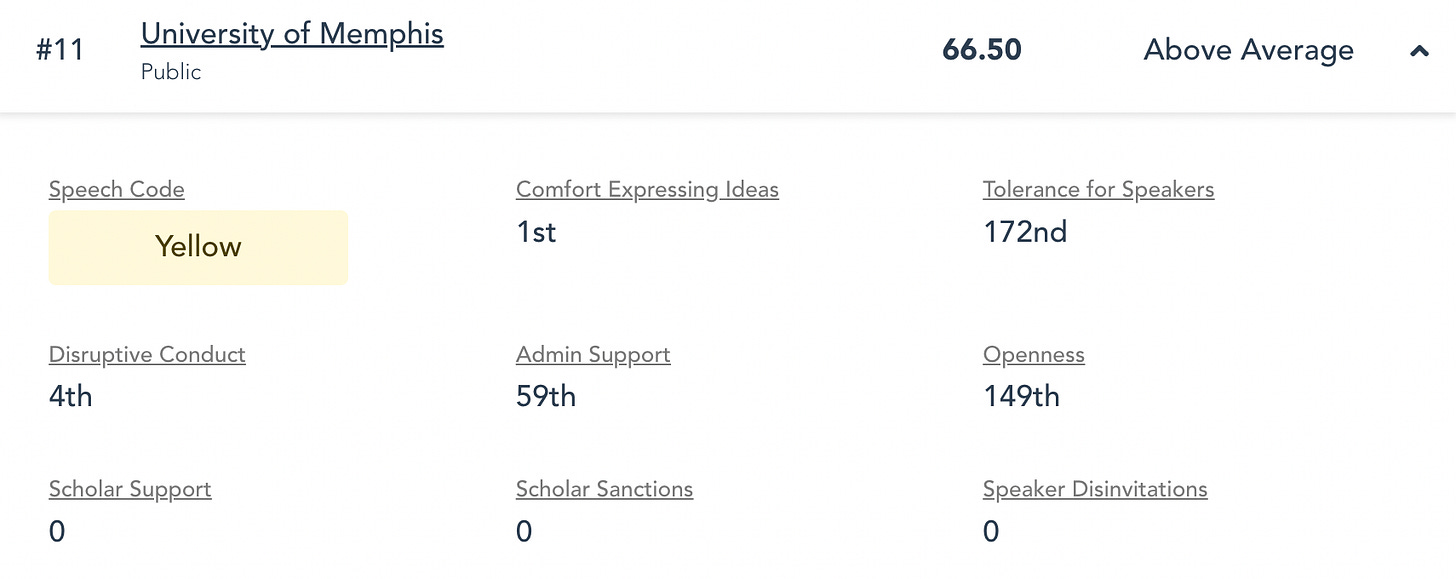

Oregon State vs. University of Memphis

Again the lower ranked school has an edge on most of the survey categories. But while this matches the pattern I’m looking for, it’s actually more troubling to me to see so much divergence among these separate measures.

At first glance comfort expressing ideas seems like it should be pretty related to openness, but Memphis tops one while performing abominably on the other. Similarly, disruptive conduct is outstanding while tolerance for speakers approaches the worst of the pack. Is it really possible to find students that hate provocative speakers this much but would never condone protesting them?

UNC Charlotte vs. Arkansas State

Again we’re seeing incoherent results from the Yellow-rated school, despite relatively strong performance in the head to head comparison. This leads me to suspect that FIRE’s survey measures are not particularly robust.

But before we tackle this possibility directly, let’s examine what might be the most egregious feature of the FIRE ranking methodology.

Warning Schools

So far I’m conflicted. Speech code is doing a lot of the work here, but the survey measures don’t seem reliable enough to convince me that FIRE has clearly superior options. Should I let them off the hook?

I would consider that conclusion more seriously if it weren’t for FIRE’s special mistreatment of warning schools. Based on their methodology, they classify five schools as inadmissible for consideration alongside the rest of the list.

Seeing as these schools all have religious commitments that supersede their commitment to free speech, it certainly seems fair for FIRE to penalize them relative to schools that are all-in on free speech absolutism. But does having other specified priorities warrant being relegated to an entirely distinct underclass?

I don’t think this holds water even conceptually. In other contexts, we often seem perfectly willing to acknowledge high performance on its own merits despite other constraining priorities possessed by the actors involved. If Bill Gates saves millions of lives philanthropically while maintaining formal commitments to Microsoft’s shareholders, are we justified in dismissing his contributions? In a similar vein, most people can simultaneously grant that religious institutions are committed to certain spiritual observances first and foremost and that they deserve some degree of credit for helping people with secular problems.

In short, I think that there’s a strong a priori case for allowing some modicum of tolerance toward institutional approaches at odds with FIRE’s preferred framework. Assuming that FIRE believes campus survey metrics add value beyond their own subjective assessment, it should be possible to compare so called warning schools against their main list counterparts in some meaningful sense.

Pepperdine vs. UChicago

While separate categorization makes the component aggregates and overall scores non-comparable between warning and non-warning schools, headline findings of raw survey results should give us a rough sense for how they stack up.

To avoid cherry-picking, let’s first compare the #2 warning school to the #1 school overall. (Hillsdale is too much of an outlier with a 10.3 conservative to liberal ratio.)

I think Pepperdine does OK here. Granting that these are only the (FIRE curated) highlights, the Warning school only performs meaningfully worse on the self-censorship category.

Even this substantial gap is far from a slam dunk victory for the FIRE favorite. Given Chicago’s much higher liberal to conservative ratio, it’s hard to say if this reflects a better speech environment or if there are simply fewer conservatives to report being intimidated. While it is common to instinctively deride religious schools as conservative echo chambers, conservatives are actually a minority of students at Pepperdine and partisan donations by faculty strongly favor progressive candidates.

To address this concern, let’s take a look at the highest ranked school with a similar campus size and lib-con ratio.

Pepperdine vs. Bucknell University

Bucknell (#48) is a private university with the same enrollment as Pepperdine (~3600) and a slightly higher liberal to conservative ratio at 2:1. On highlighted survey measures, they seem basically interchangeable.

After these comparisons, the stated justification for separate warning schools appears tenuous at best. Pepperdine compares favorably to non-religious private universities and is not obviously horribly worse than top ranked Chicago. Another relevant comparison might be Notre Dame, which manages to rank at #31 despite a Red rating and the accompanying 10 point penalty. To me, this feature of FIRE’s rankings just seems wrong.

The Elephant in the Room

Most of the analysis that I’ve presented here presumes that FIRE survey metrics are meaningful. But what if they aren’t?

While rigorously evaluating statistical constructs is above my pay grade, we might be able to scrutinize the speech code component of FIRE’s rankings using simple measures of revealed preference. Do students from across the ideological spectrum feel comfortable enough to attend colleges FIRE considers the worst of the worst?

Viewpoint Diversity Rankings

Thanks to Eric Kaufmann at CSPI, there’s a really convenient way to assess how frequently ideologically diverse students choose to actually attend Warning schools in the data. Using FIRE’s own data on self-identified ideology, Kaufmann ranks 159 of the schools surveyed according to viewpoint diversity on campus. While it’s not a perfect measure,[4] the results are still pretty unflattering for FIRE’s current ranking system.

Baylor, BYU, and Pepperdine all finish in the top 30 for viewpoint diversity while FIRE’s top ranked Warning school comes in close to last.

This is really bizarre. Why would FIRE completely ostracize these schools when revealed preference and their own survey measures show that they tend to have reasonably decent campus environments?

My Hypotheses

If I’d had a little more forethought, I probably could have reached out to FIRE and presented this question to them. They seem like reasonable people and I am not in any way trying to malign their motives or intent. As an unusual blogger person, I think free speech is really great!

But since I have real life work to do and various guest posts I owe to other people, I’ll close with my own hopefully not too wild speculation.

Here’s what I think is happening:

FIRE has very firm commitments to a particular vision of liberal education, which is highly tied up in their specific advocacy around speech codes. Since they feel these written codes are important and already invest a lot into them, they want that work to be reflected in the rankings somehow.

Collecting reliable survey measures is really hard. Even if you create the perfect survey for the main institutions you’re interested in (i.e. the all-powerful Ivy League), screwy things can happen in other contexts. To prevent too many screwy things from happening, FIRE made the understandable decision to assign a lot of weight to their own speech code measures.

Hillsdale in particular is a really weird school. If we went by survey metrics alone, they would look really awesome. Even with the substantial FIREpower of the standard speech code penalties, they were going to need a bigger boat.

It’s fun to imagine the data team wringing their hands over this issue. While they may have tried a thousand minute variations on their weighting methodology to rationalize this major outlier within the existing framework, their ultimate response probably boiled down to “nope, we’re not letting Hillsdale rank side by side with Chicago”. Rather than simply drop them from the data, they decided to pull in a few other schools from the Red speech code category and call them all “Warning” schools.

Most people took this seemingly minor decision at face value instead of obsessively hashing it out over a 3000 word blog post.

Bottom Line

FIRE bills their rankings as primarily a reflection of student voices on campus, as shown by the opening lines of their most recent release announcement.

The largest survey on student free expression ever conducted adds 45,000 student voices to the national conversation about free speech on college campuses — and finds that many are afraid to speak out on their campus. Many others want to silence the voices of those who don’t share their viewpoints, creating campus echo chambers.

But a simple inspection of FIRE’s ranking methodology suggests this isn’t really the case. Schools with speech codes that FIRE likes receive a large boost relative to schools whose codes it dislikes, while some otherwise commendable schools are unfairly labeled as beyond the pale. FIRE could improve their rankings on the margin by simply removing Hillsdale as an outlier and abolishing the dubious and overly punitive “Warning school” category they currently apply to 4 non-outlier religious schools.

For anyone who’s tempted to come away from this with an overly negative picture of FIRE, please remember that this kind of thing is inherently super difficult. There is no perfect measurement for the abstract category of “free speech”, and FIRE’s attempt to quantify this important issue appears to be significantly better than the previous alternatives their rankings replace.

Also I’m just some guy on the internet. If by some chance FIRE finds this article, I’m happy to edit this post to include their response to this in their own words below.

Until then, take my words and FIRE’s rankings with a grain of salt.

EDIT (10/7): FIRE responds!

[1] More accurately, there are six distinct surveyed components that factor into the FIRE rankings, which is mostly inferable from context later in the post. Here is the list:

Openness to discussing challenging topics on campus

Tolerance for allowing controversial Liberal speakers on campus

Tolerance for allowing controversial Conservative speakers on campus

Administrative Support

Comfort Expressing Ideas

Disruptive conduct

[2] Again, note that I am abstracting away from some of the details. Speaker tolerance consists of mean tolerance (obtained by taking the average of tolerance toward conservative and liberal speakers) and tolerance difference (obtained by taking the difference between conservative tolerance and liberal tolerance). A full description is available here.

[3] Z denotes standard deviations from the mean raw overall score; FIRE Rating denotes the standard deviation rewards/penalties based on speech code.

[4] For instance, students can only attend schools they are admitted to, which is determined partially by things like standardized test scores and GPA.

Thanks for this thoughtful review of, and improvement suggestions for, FIRE's campus free speech ratings!

"I probably could have reached out to FIRE ... If by some chance FIRE finds this article ..."

If you're interested in their having a look at this post, it probably wouldn't take too long to tag Adam Goldstein (@AdGo) on Twitter, FIRE's VP of Research.

In mid-August 2022, he responded forthrightly when @PNWSelina noted that FIRE's ideological scale characterizations of at least three speakers and their audience pushback at campus events could bear re-evaluation, and I shared her takes with Adam. See, for instance, https://twitter.com/AdGo/status/1560831788209119232.

I studied at a large Chinese university for a semester. Students who had VPNs (and/or students from HK) told stories about their peers being reprimanded and even disappeared for talking about certain issues such as feminism. The other students never told such stories. I wonder if the non-VPN students would rank their university highly on a FIRE survey.